Why software developers hate AI

Understanding the Friction Between LLMs and Traditional Software

Recently, I've had some thought-provoking conversations with former software engineering colleagues from the pre-transformer era. They form colourful tribes that match the twists and turns of my career. I was fascinated that these esteemed technologists recognise the current AI wave as being as significant as the Internet, yet seem indifferent or even hostile towards AI developments.

One possible reason is that AI threatens the livelihood of artisans in many domains, including software. AI models are trained on their work and then outcompete the original authors in a market where the lowest bidder wins with a barely-good-enough product, devoid of the deep quality that craftspeople infuse.

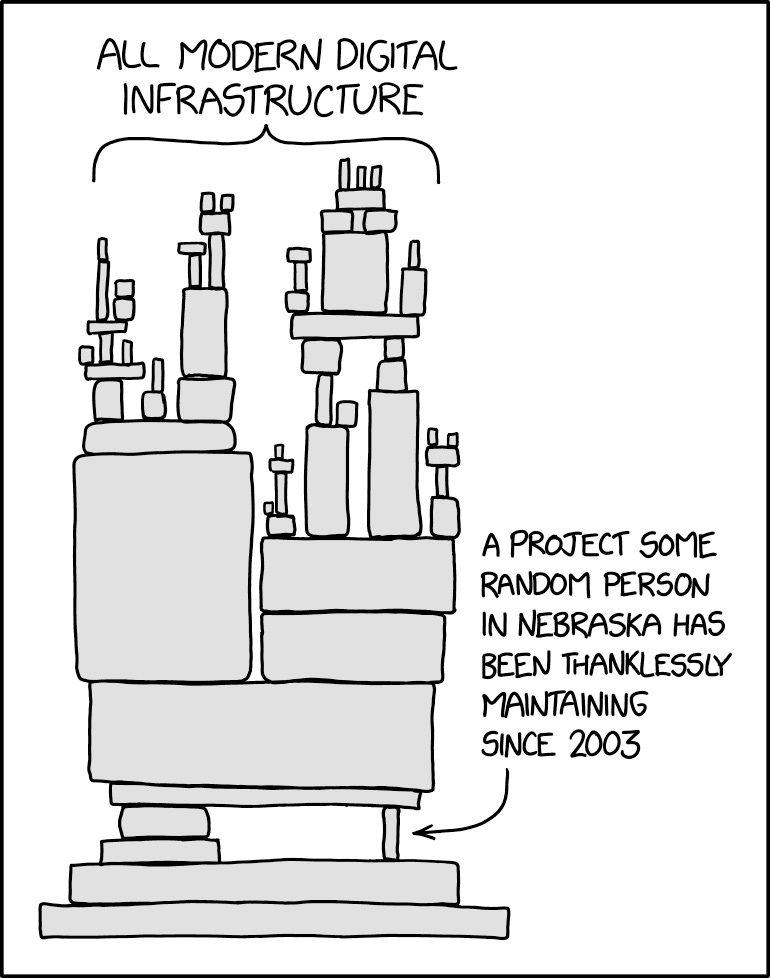

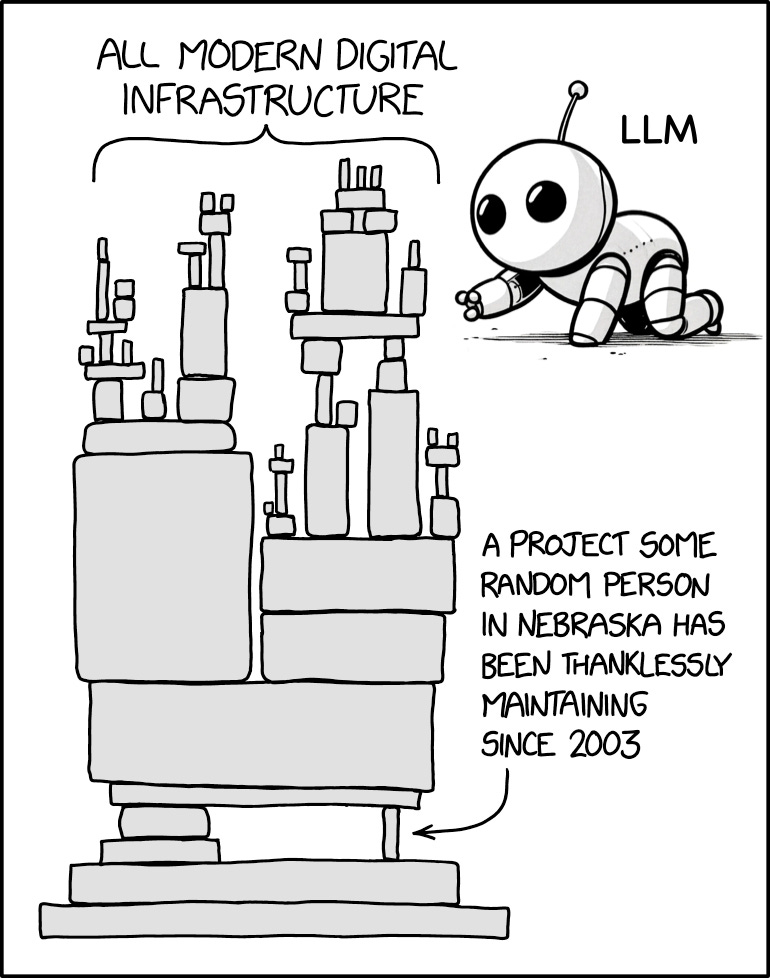

There’s another, deeper reason why the seasoned technologists in my network eschew AI. The large software systems we construct stack many abstraction layers, from applications and libraries all the way down to transistors and physics. This teetering tower stays balanced only because the layers honour agreements at their boundaries. The large language models (LLMs) that are rapidly changing the world don’t follow the same agreements.

For me, part of the magic of LLMs (like ChatGPT) is how well they “do what I mean, not what I say”. Being able to tolerate misspellings and badly formed inputs is immensely powerful when dealing with human language. As their use grows in every domain, LLMs are increasingly called upon to produce not just human language but also structured data formats with a strict grammar. Just as they sometimes get facts about the world wrong, LLMs sometimes fail to adhere to those strict grammars. This poses a problem at the interface with traditional software systems, which expect the rules to be followed, as with every agreement at an abstraction boundary.
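To make the boundary problem concrete, here is a minimal sketch in Python. The `llm_output` string is an invented example of a nearly correct response, and `parse_strict` is a hypothetical helper name. A human reader would understand the data at a glance, but the standard JSON parser rejects it because of a single trailing comma.

```python
import json
from typing import Optional

# Invented example of model output: almost valid JSON, except for the
# trailing comma after "Rust".
llm_output = '{"name": "Ada", "languages": ["Python", "Rust",]}'


def parse_strict(text: str) -> Optional[dict]:
    """Return the parsed object, or None if the text breaks the JSON grammar."""
    try:
        return json.loads(text)
    except json.JSONDecodeError:
        # Traditional software treats any grammar violation as a hard failure.
        return None


if __name__ == "__main__":
    print(parse_strict(llm_output))        # rejected: trailing comma
    print(parse_strict('{"name": "Ada"}'))  # accepted: well-formed JSON
```

The parser is doing exactly what it promised to do; the agreement at the boundary simply has no room for "close enough".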

Various strategies are being used to overcome this mismatch when LLMs produce malformed structured output. A popular one is to retry and hope for a correct result on the next attempt. Another is to parse sloppily when the correct result can be inferred from context. These strategies offend the sensibilities of traditional software practitioners, who interpret the probabilistic behaviour of LLMs as an uncorrectable, intermittent fault. The real fault is our expectation of receiving perfectly structured output from a probabilistic model.
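Both strategies can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a recommended implementation: `generate` stands in for whatever function calls the model, and `generate_with_retry` and `parse_lenient` are hypothetical names. The lenient parser handles only two kinds of sloppiness (surrounding chatter and trailing commas), and its regex-based repair could corrupt string values that happen to contain `,}` or `,]`.

```python
import json
import re
from typing import Callable, Optional


def generate_with_retry(generate: Callable[[], str],
                        max_attempts: int = 3) -> Optional[dict]:
    """Retry strategy: call the model again until its output parses as a JSON object."""
    for _ in range(max_attempts):
        text = generate()  # `generate` is a stand-in for a real model call
        try:
            result = json.loads(text)
        except json.JSONDecodeError:
            continue  # malformed output: hope the next attempt is better
        if isinstance(result, dict):
            return result
    return None  # every attempt failed


def parse_lenient(text: str) -> Optional[dict]:
    """Sloppy parsing: extract the outermost {...} span and drop trailing commas."""
    match = re.search(r"\{.*\}", text, re.DOTALL)
    if match is None:
        return None
    # Remove commas that sit directly before a closing brace or bracket.
    candidate = re.sub(r",\s*([}\]])", r"\1", match.group(0))
    try:
        return json.loads(candidate)
    except json.JSONDecodeError:
        return None


if __name__ == "__main__":
    # Simulated model: the first answer is malformed, the second is valid.
    answers = iter(['Sure! {"ok": true,}', '{"ok": true}'])
    print(generate_with_retry(lambda: next(answers)))
    print(parse_lenient('Sure! Here you go: {"ok": true,}'))
```

Neither function offers a guarantee: the retry loop can exhaust its attempts, and the lenient parser guesses at intent. That lack of a guarantee is precisely what makes practitioners uneasy.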

The mismatch between LLMs and traditional software needs a more convincing solution before my ex-colleagues will get on board, because they care deeply about the long-term sturdiness of the abstraction towers we depend on. For that to happen, either traditional software needs to get better at dealing with the messiness of LLMs, or LLMs need to get better at conforming to strict constraints.